Unit 3 - Notes

CSE316

Unit 3: Process Synchronization

1. Process Concepts and Relationships

Concurrent Processes

Concurrent processes are processes that execute simultaneously in a system. They can be:

- Independent Processes: Execution of one does not affect the execution of another.

- Cooperating Processes: Execution of one can affect or be affected by other processes. These processes share resources (memory, code, or data).

Reasons for allowing process cooperation:

- Information Sharing: Access to the same file or database.

- Computation Speedup: Breaking a task into subtasks that run in parallel (requires multiple CPUs).

- Modularity: Dividing system functions into separate processes/threads.

- Convenience: A user performing multiple tasks at once (e.g., editing and printing).

Hierarchy of Processes

In most OSs, processes are organized hierarchically.

- Parent Process: The process that creates another process.

- Child Process: The newly created process.

- This creates a tree structure. In UNIX, the root is the

initprocess.

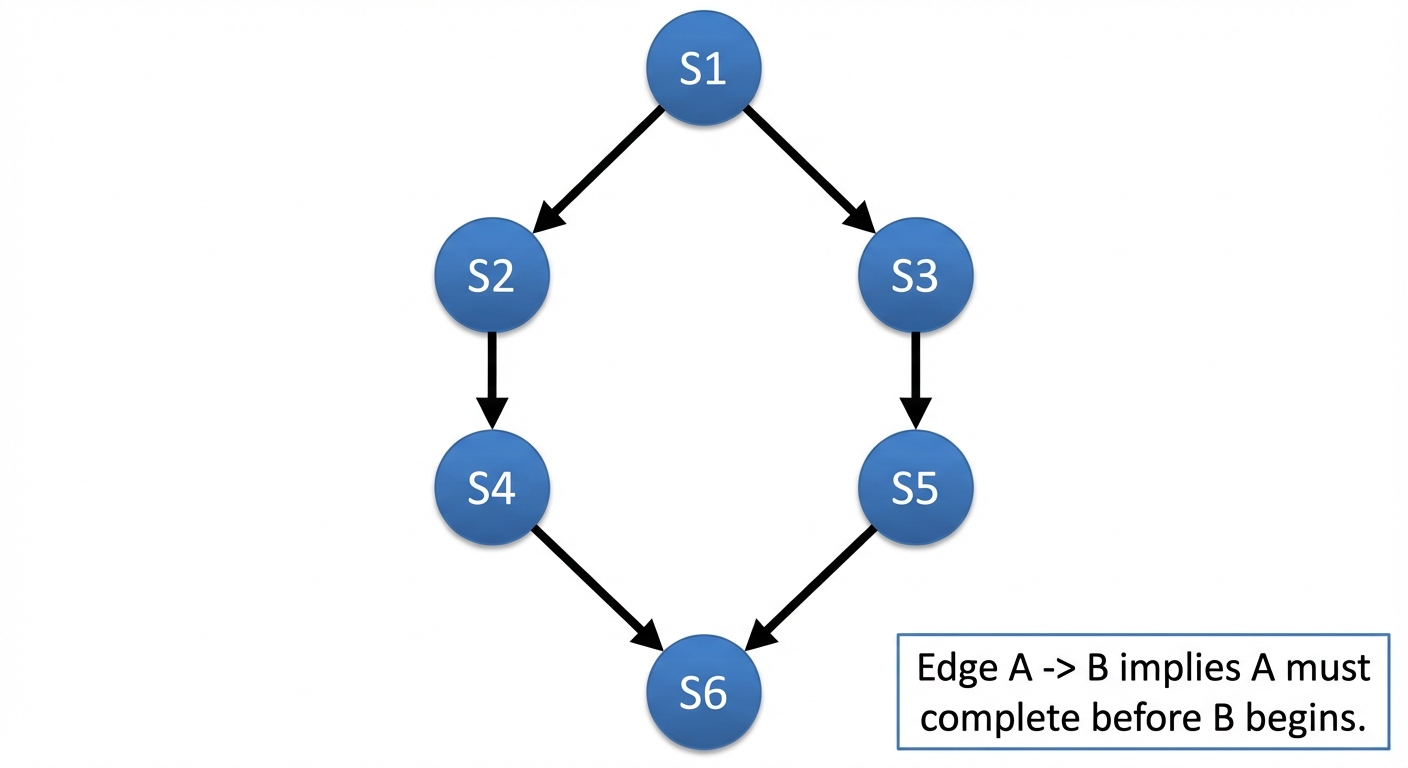

Precedence Graph

A precedence graph is a Directed Acyclic Graph (DAG) used to represent the execution dependencies between concurrently executing statements or processes.

- Nodes: Represent individual statements or tasks.

- Edges: Represent execution precedence (Edge means must finish before starts).

2. The Critical Section Problem

Race Condition

A situation where several processes access and manipulate the same data concurrently, and the outcome of the execution depends on the particular order in which the access takes place.

The Critical Section

A code segment in a process where shared resources (common variables, files, tables) are accessed.

- Entry Section: Code requesting permission to enter the critical section.

- Critical Section (CS): The actual code accessing shared data.

- Exit Section: Code indicating the process has left the CS.

- Remainder Section: Remaining code.

Solution Requirements

To solve the Critical Section Problem, a solution must satisfy three criteria:

- Mutual Exclusion: If process is executing in its critical section, no other processes can be executing in their critical sections.

- Progress: If no process is executing in its critical section and some processes wish to enter, only those processes not in their remainder sections can participate in deciding which will enter next, and this selection cannot be postponed indefinitely.

- Bounded Waiting: There must be a limit on the number of times other processes are allowed to enter their critical sections after a process has made a request to enter and before that request is granted.

Classical Two-Process Solution: Peterson’s Algorithm

A software-based solution for two processes ( and ) that ensures all three criteria. It uses two shared variables:

int turn: Indicates whose turn it is to enter.boolean flag[2]: Indicates if a process is ready to enter.

Algorithm for Process (where ):

do {

flag[i] = true;

turn = j;

while (flag[j] && turn == j); // Busy wait

/* CRITICAL SECTION */

flag[i] = false;

/* REMAINDER SECTION */

} while (true);

N-Process Solution (Bakery Algorithm)

Before entering its critical section, a process receives a number. The holder of the smallest number enters the CS. If numbers are tied, the process with the lower process ID () goes first.

3. Hardware Primitives for Synchronization

Software solutions (like Peterson's) are not guaranteed to work on modern architectures due to instruction reordering. Hardware support is required.

1. Test-And-Set

An atomic instruction (non-interruptible). It reads a boolean value and sets it to true efficiently.

boolean TestAndSet(boolean *target) {

boolean rv = *target;

*target = TRUE;

return rv;

}

Usage: Used to implement a spinlock (Mutual Exclusion).

2. Swap (Compare-and-Swap)

Atomically swaps the contents of two variables.

void Swap(boolean *a, boolean *b) {

boolean temp = *a;

*a = *b;

*b = temp;

}

4. Semaphores

A semaphore is an integer variable accessed only through two standard atomic operations: wait() (or ) and signal() (or ).

Operations

- Wait(S): Decrements the value. If , the process waits (blocks or busy waits).

- Signal(S): Increments the value. If processes are waiting, one is woken up.

Types of Semaphores

- Counting Semaphore: The integer value can range over an unrestricted domain. Used to control access to a resource with instances.

- Binary Semaphore (Mutex): The integer value can range only between 0 and 1. Used for mutual exclusion.

Implementation (Avoiding Busy Waiting)

Instead of "spinning" (while loop), the process can block itself.

- Block: Place the process in a waiting queue associated with the semaphore and switch state to waiting.

- Wakeup: Move a process from the waiting queue to the ready queue.

5. Classical Synchronization Problems

1. The Producer-Consumer Problem (Bounded Buffer)

- Producer: Generates data and puts it into a buffer.

- Consumer: Consumes data from the buffer.

- Synchronization:

- Producer must wait if the buffer is full.

- Consumer must wait if the buffer is empty.

- Access to the buffer pool requires mutual exclusion.

Semaphores used:

mutex(init 1): For exclusive access to buffer.empty(init N): Counts empty slots.full(init 0): Counts filled slots.

2. The Readers-Writers Problem

- Readers: Only read the data (multiple readers allowed simultaneously).

- Writers: Read and write data (exclusive access required).

- Constraint: If a writer is in the CS, no other reader or writer is allowed.

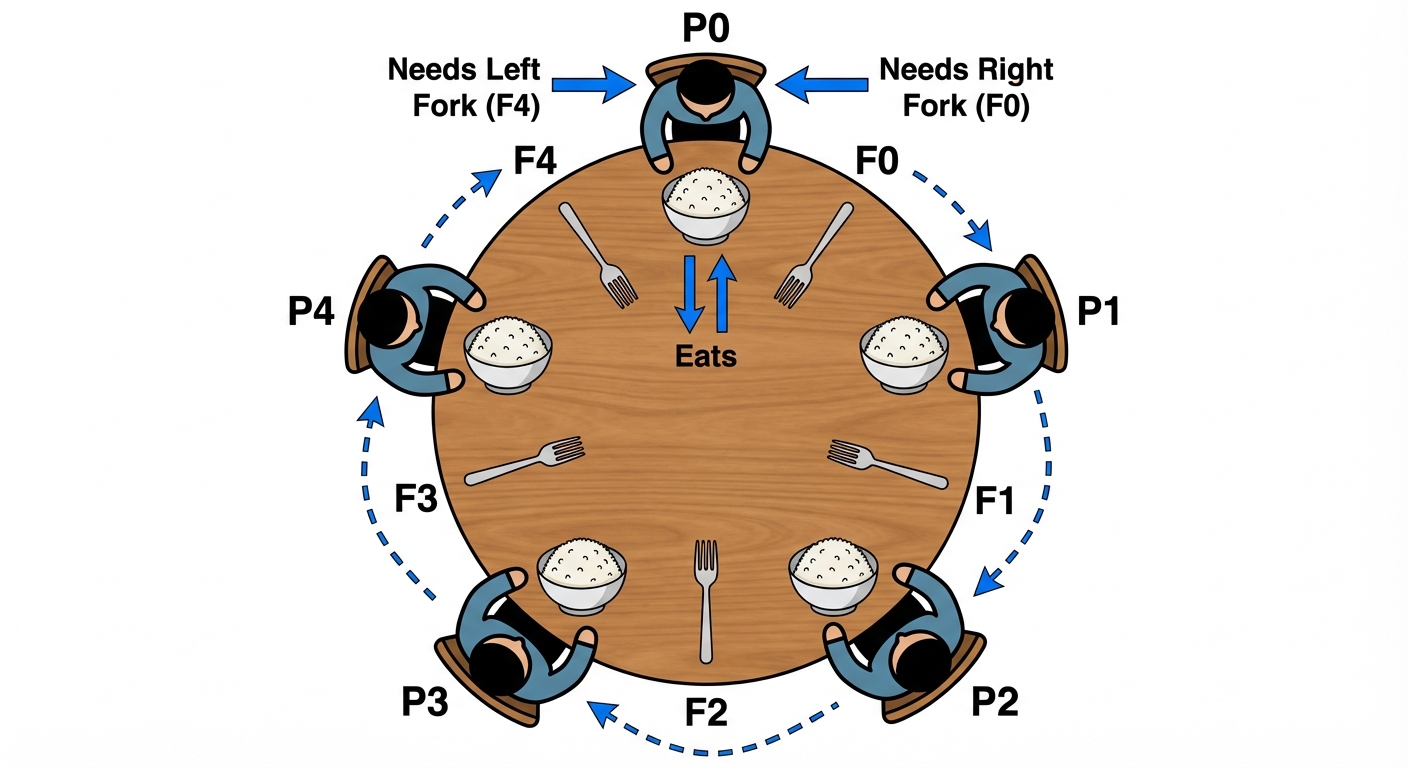

3. The Dining Philosophers Problem

Five philosophers sit at a circular table. Between each pair, there is a single chopstick (or fork).

- Activity: Thinking and Eating.

- Constraint: To eat, a philosopher needs two chopsticks (left and right).

- Issue: Deadlock occurs if every philosopher picks up their left chopstick simultaneously.

Solution: Use semaphores for chopsticks. To prevent deadlock, allow a philosopher to pick up forks only if both are available, or use an asymmetric solution (odd philosophers pick left first, even pick right first).

6. Monitors

A Monitor is a high-level synchronization construct (a programming language data type) that handles synchronization automatically, reducing errors associated with semaphores.

- Structure: A collection of procedures, variables, and data structures grouped together.

- Property: Only one process can be active within the monitor at a time.

- Condition Variables: To allow processes to wait within the monitor, condition variables (

x,y) are used with operations:x.wait(): Suspends the calling process until another process calls signal.x.signal(): Resumes exactly one suspended process.

7. Threads and Multithreading

Definition

A Thread (Lightweight Process - LWP) is a basic unit of CPU utilization. It comprises:

- Thread ID

- Program Counter

- Register Set

- Stack

It shares with other threads belonging to the same process:

- Code section, Data section, and OS resources (open files, signals).

Benefits of Multithreading

- Responsiveness: An application can continue running even if part of it is blocked.

- Resource Sharing: Threads share memory and resources of the process by default.

- Economy: Context switching between threads is faster than between processes.

- Scalability: Threads can run in parallel on multiprocessor architectures.

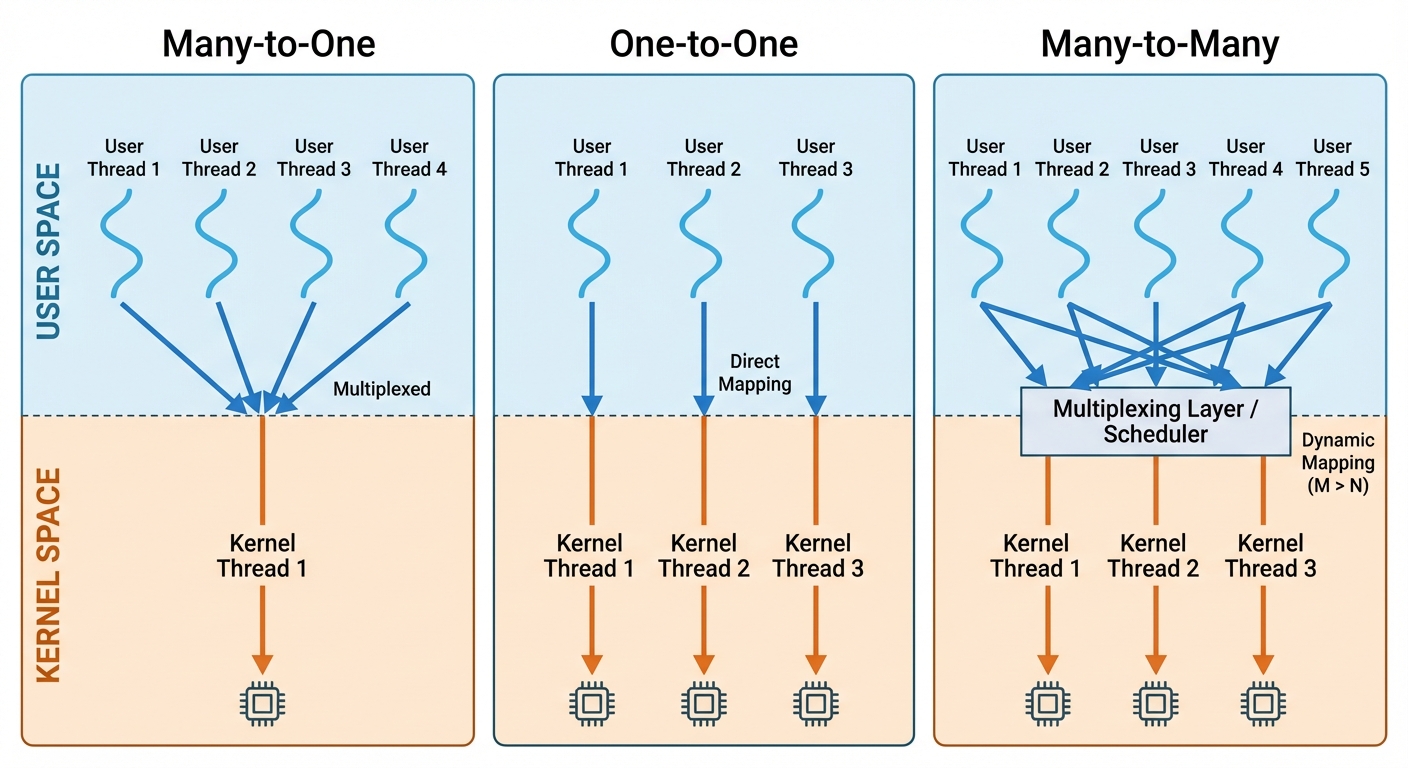

Multithreading Models

How user-level threads relate to kernel-level threads.

- Many-to-One Model: Many user-level threads map to a single kernel thread.

- Pros: Efficient context switching (user space).

- Cons: Entire process blocks if one thread makes a blocking system call. No parallel execution on multicore.

- One-to-One Model: Each user-level thread maps to a distinct kernel thread.

- Pros: High concurrency. Blocking one thread doesn't block others.

- Cons: Creating a user thread requires creating a kernel thread (overhead).

- Many-to-Many Model: Many user-level threads are multiplexed to a smaller or equal number of kernel threads.

- Pros: Flexible. Developers can create many threads, and the OS supports sufficient kernel threads.

Scheduler Activations

A mechanism for communication between the user-thread library and the kernel.

- The kernel provides a set of virtual processors (LWPs) to the application.

- Upcalls: The kernel notifies the application about events (e.g., a thread blocking) so the thread library can schedule another thread.

8. Example of Threaded Programs

Pthreads (POSIX Threads) Example in C

This example creates a thread that calculates the sum of numbers up to .

#include <pthread.h>

#include <stdio.h>

#include <stdlib.h>

int sum; /* shared data */

void *runner(void *param); /* threads call this function */

int main(int argc, char *argv[]) {

pthread_t tid; /* the thread identifier */

pthread_attr_t attr; /* set of thread attributes */

if (argc != 2) {

fprintf(stderr,"usage: a.out <integer value>\n");

return -1;

}

/* get the default attributes */

pthread_attr_init(&attr);

/* create the thread */

pthread_create(&tid, &attr, runner, argv[1]);

/* wait for the thread to exit */

pthread_join(tid, NULL);

printf("sum = %d\n", sum);

}

/* The thread will execute in this function */

void *runner(void *param) {

int i, upper = atoi(param);

sum = 0;

for (i = 1; i <= upper; i++)

sum += i;

pthread_exit(0);

}

Java Threads Example

Threads in Java can be created by extending the Thread class or implementing the Runnable interface.

class MyThread extends Thread {

public void run() {

System.out.println("Thread is running...");

}

}

public class Main {

public static void main(String args[]) {

MyThread t1 = new MyThread();

t1.start(); // Starts the thread and calls run()

}

}