Unit 5 - Notes

INT428

Unit 5: Generative AI & Ethics / Prompt Engineering

1. Fundamentals of Generative AI

Generative AI refers to a subset of artificial intelligence focused on creating new content—including text, images, audio, video, and code—in response to user prompts. Unlike traditional AI, which typically analyzes or classifies existing data, Generative AI uses patterns learned from massive datasets to generate novel outputs that resemble the training data.

1.1 Discriminative vs. Generative AI

- Discriminative AI: Focuses on distinguishing between classes (e.g., "Is this image a cat or a dog?"). It models the conditional probability of a label given the data, $P(Y \mid X)$.

- Generative AI: Focuses on how the data is created (e.g., "Draw a picture of a cat"). It models the probability of the data itself, $P(X)$ (or the joint distribution $P(X, Y)$ when labels are available).
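
The difference between the two views can be sketched with simple counting. This is a minimal illustration on a made-up dataset, not how real models are trained; the feature names, labels, and helper functions below are all hypothetical.

```python
from collections import Counter

# Hypothetical toy dataset of (feature, label) pairs; the values are made up.
data = [
    ("whiskers", "cat"), ("whiskers", "cat"), ("whiskers", "dog"),
    ("floppy_ears", "dog"), ("floppy_ears", "dog"), ("floppy_ears", "cat"),
    ("floppy_ears", "dog"), ("whiskers", "cat"),
]

joint_counts = Counter(data)                    # how often each (x, y) pair occurs
feature_counts = Counter(x for x, _ in data)    # how often each x occurs
total = len(data)


def p_label_given_x(label, feature):
    """Discriminative view: estimate P(y | x) from counts."""
    return joint_counts[(feature, label)] / feature_counts[feature]


def p_x(feature):
    """Generative view: estimate P(x), the probability of the data itself."""
    return feature_counts[feature] / total


cat_given_whiskers = p_label_given_x("cat", "whiskers")   # 3 of 4 "whiskers" rows are cats
prob_whiskers = p_x("whiskers")                           # 4 of 8 rows have "whiskers"
```

A discriminative model only needs the first quantity to classify. A generative model needs a distribution over the data, which is what makes sampling new examples possible.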

1.2 Key Generative Architectures

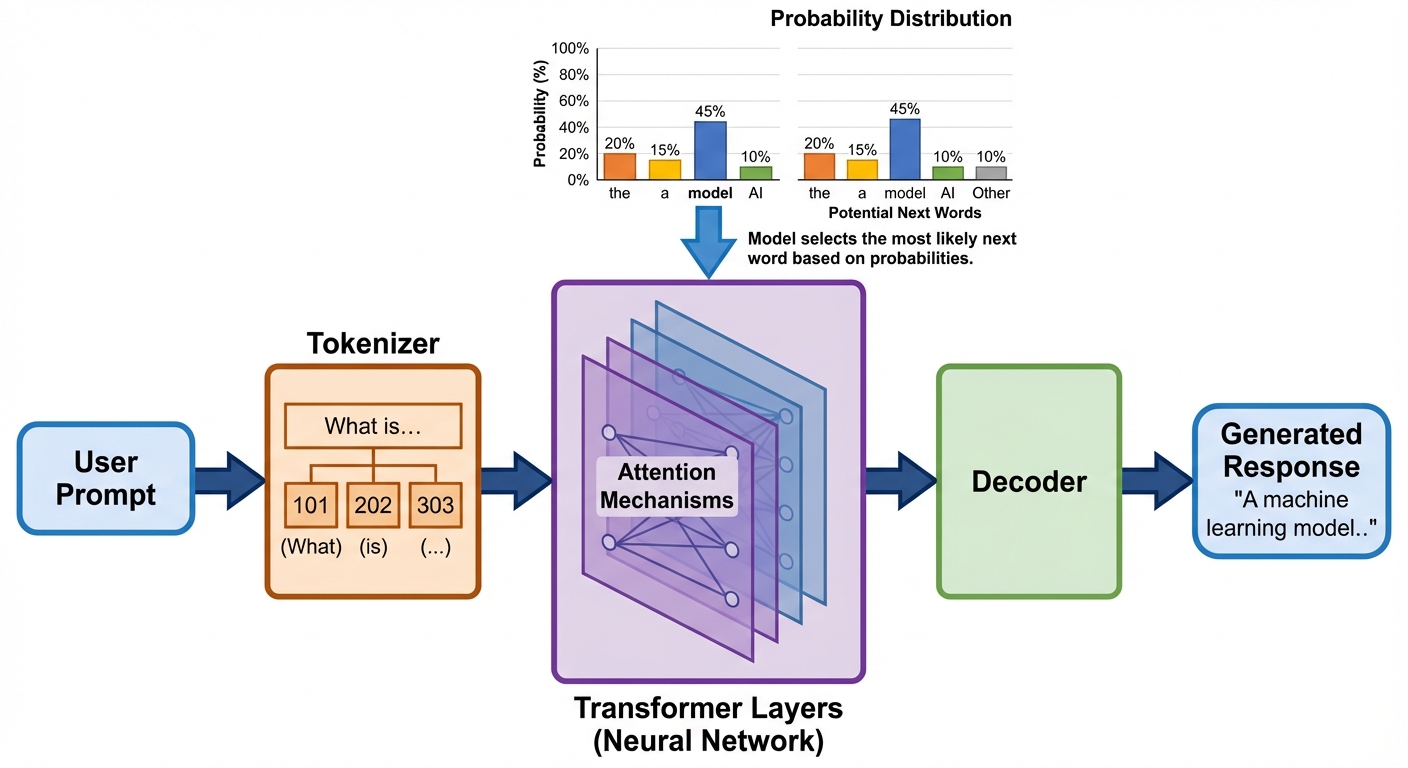

Large Language Models (LLMs)

LLMs are deep learning algorithms that can recognize, summarize, translate, predict, and generate text and other content based on knowledge gained from massive datasets.

- Architecture: Based on the Transformer architecture (specifically the self-attention mechanism).

- Function: They predict the next word (token) in a sequence based on the context of previous words.

- Examples: GPT-4 (OpenAI), Claude (Anthropic), Llama (Meta).
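
The self-attention idea can be sketched in a few lines. The example below is a simplified, single-head version in plain Python. It assumes the toy token vectors are used directly as queries, keys, and values, whereas a real Transformer applies learned projection matrices first. The causal restriction (each position sees only itself and earlier positions) reflects next-token prediction.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def causal_self_attention(queries, keys, values):
    """Scaled dot-product attention; position i attends to positions 0..i."""
    d_k = len(keys[0])
    outputs = []
    for i, query in enumerate(queries):
        scores = [dot(query, keys[j]) / math.sqrt(d_k) for j in range(i + 1)]
        weights = softmax(scores)
        # Each output is a weighted average of the visible value vectors.
        output = [
            sum(w * values[j][c] for j, w in enumerate(weights))
            for c in range(len(values[0]))
        ]
        outputs.append(output)
    return outputs


# Hypothetical 2-dimensional embeddings for a 3-token sequence.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
attended = causal_self_attention(tokens, tokens, tokens)
```

The first position can only attend to itself, so its output equals its own value vector. Later positions blend information from everything before them.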

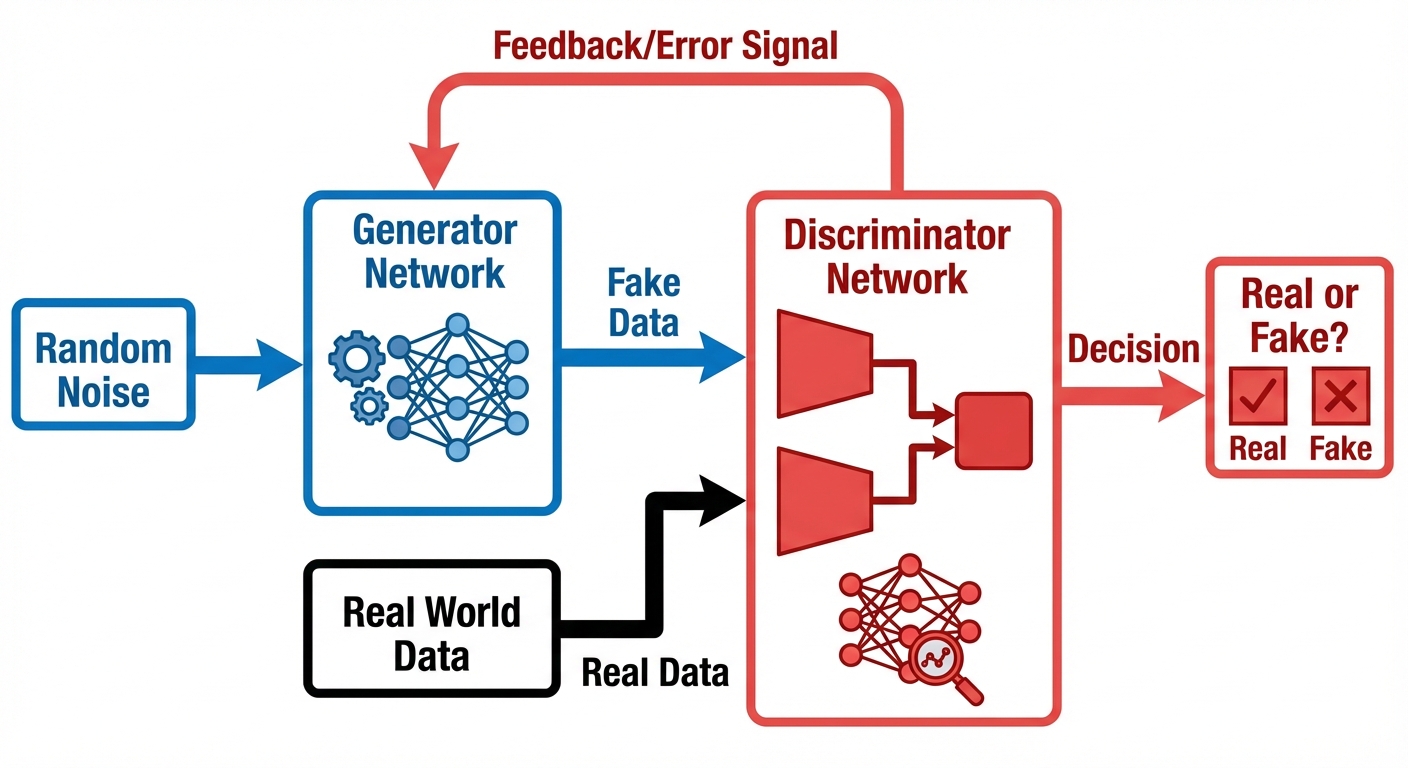

Generative Adversarial Networks (GANs)

GANs consist of two neural networks competing against each other in a zero-sum game.

- The Generator: Creates fake data (e.g., an image) and tries to fool the discriminator.

- The Discriminator: Evaluates data and determines if it is real (from the dataset) or fake (from the generator).

- Outcome: As training progresses, the generator becomes adept at creating photorealistic images.

- Use Cases: Deepfakes, image upscaling, realistic face generation.
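
The competition between the two networks is usually written as a minimax objective. The notation below is not from these notes and follows the standard GAN formulation: $D(x)$ is the discriminator's estimated probability that $x$ is real, $G(z)$ is the generator's output for a noise vector $z$, and $p_{\text{data}}$ and $p_z$ are the data and noise distributions.

```latex
\min_{G} \max_{D} \; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_{z}(z)}\big[\log\big(1 - D(G(z))\big)\big]
```

The discriminator tries to maximize this value by scoring real data high and generated data low, while the generator tries to minimize it by making $D(G(z))$ close to 1.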

Diffusion Models

Diffusion models are currently the dominant approach to high-quality image generation.

- Forward Process: Gradually adds Gaussian noise to an image until it becomes random static.

- Reverse Process: A neural network learns to reverse this process, starting from pure noise and gradually denoising it into a coherent image, typically guided by a text prompt.

- Examples: Stable Diffusion, Midjourney, DALL-E 3.
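
The forward process can be sketched as follows. This is a minimal illustration that assumes a linear noise schedule with 1000 steps and the closed-form sampling rule used in DDPM-style models; the schedule values, the function names, and the four-value "image" are illustrative assumptions. The learned reverse process is not shown because it requires a trained network.

```python
import math
import random


def make_alpha_bars(num_steps, beta_start=1e-4, beta_end=0.02):
    """Cumulative products of (1 - beta_t) for a linear noise schedule."""
    alpha_bars = []
    running = 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(x0, alpha_bar, rng):
    """Sample x_t = sqrt(alpha_bar) * x0 + sqrt(1 - alpha_bar) * eps."""
    signal_scale = math.sqrt(alpha_bar)
    noise_scale = math.sqrt(1.0 - alpha_bar)
    return [signal_scale * v + noise_scale * rng.gauss(0.0, 1.0) for v in x0]


rng = random.Random(0)
alpha_bars = make_alpha_bars(1000)
image = [0.8, -0.3, 0.5, 0.1]          # hypothetical 4-"pixel" image

slightly_noisy = add_noise(image, alpha_bars[10], rng)   # still mostly signal
almost_static = add_noise(image, alpha_bars[-1], rng)    # almost pure noise
```

Because the remaining signal fraction shrinks at every step, the final sample carries almost no information about the original image, which is the "random static" starting point for the reverse process.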

2. Industrial Applications

Generative AI is transforming industries by automating creation and synthesis tasks.

2.1 Content Creation & Marketing

- Copywriting: Generating blogs, social media posts, and ad copy.

- Graphic Design: Creating logos, stock photos, and artistic concepts instantly.

- Video Production: AI avatars for training videos; script-to-video generation.

2.2 Software Development (Automation)

- Code Generation: Tools like GitHub Copilot write boilerplate code, suggest functions, and help debug errors.

- Documentation: Automatically generating documentation from codebases.

- Legacy Translation: Converting code from older languages (e.g., COBOL) to modern ones (e.g., Python).

2.3 Customer Operations

- Chatbots: Advanced agents that can handle complex queries rather than just following a decision tree.

- Sentiment Analysis: Summarizing customer feedback trends from thousands of reviews.

3. Ethics and Responsible Use of Generative AI

As GenAI becomes ubiquitous, ethical considerations are paramount to prevent harm.

3.1 Bias and Fairness

Models trained on internet data inherit the biases present in that data (gender, racial, or cultural stereotypes).

- Risk: An image generator might default to showing only men when asked for "images of doctors."

- Mitigation: Diverse training datasets and reinforcement learning from human feedback (RLHF) to align models with safety guidelines.

3.2 Hallucinations

"Hallucination" occurs when an LLM generates factually incorrect information but presents it confidently.

- Cause: LLMs are probabilistic engines designed to predict the next plausible word, not truth engines.

- Impact: Risk of spreading misinformation in news, legal, or medical contexts.

3.3 Intellectual Property (IP)

- Copyright: Debate surrounds whether AI-generated works can be copyrighted and whether training AI on copyrighted works constitutes "fair use."

- Plagiarism: Models might inadvertently reproduce large chunks of training text.

3.4 Malicious Use

- Deepfakes: Realistic video/audio impersonations used for fraud or defamation.

- Phishing: Generating highly personalized and convincing scam emails.

4. Fundamentals of Prompt Engineering

Prompt Engineering is the practice of designing inputs (prompts) for Generative AI tools to produce optimal outputs. It is a necessary skill because AI models are sensitive to how instructions are phrased.

4.1 Overview of Language Models (in context of prompting)

To prompt effectively, one must understand that LLMs are:

- Stochastic: The same prompt may yield slightly different results (controlled by a parameter called Temperature).

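- Context-Limited: Models have a "context window," a limit on how many tokens (prompt plus generated output) they can process at once. Parts of a long conversation that fall outside the window are no longer visible to the model.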

- Token-Based: They process text in chunks called tokens rather than character-by-character. For English text, one token averages roughly 0.75 of a word.
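
The effect of temperature can be sketched with a small sampling routine. This is a minimal illustration of temperature-scaled softmax sampling; the vocabulary and the raw scores (logits) are made up, and real APIs apply this inside the model rather than exposing it to the caller.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Divide logits by the temperature, then normalize into probabilities."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [value / temperature for value in logits]
    highest = max(scaled)
    exps = [math.exp(s - highest) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(vocab, logits, temperature, rng):
    """Draw one token at random according to the temperature-adjusted probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(vocab, weights=probs, k=1)[0]


vocab = ["cold", "warm", "late", "great"]   # hypothetical next-token candidates
logits = [2.0, 1.0, 0.5, 0.1]               # made-up model scores

sharp = softmax_with_temperature(logits, 0.2)   # low temperature: near-deterministic
flat = softmax_with_temperature(logits, 2.0)    # high temperature: more varied

rng = random.Random(42)
token = sample_token(vocab, logits, 1.0, rng)
```

A low temperature concentrates probability on the top-scoring token, so repeated runs give nearly identical output. A high temperature flattens the distribution and increases variety.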

4.2 Importance of Prompt Engineering

- Cost Efficiency: Reduces the number of API calls or retries needed to get the right answer.

- Performance: Unlocks reasoning capabilities that the model possesses but doesn't use unless specifically asked.

- Automation: Allows for the creation of reliable workflows where AI output is structured (e.g., JSON) for software integration.
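
For the automation case, downstream software usually has to check that the model really returned the requested structure. The sketch below assumes a hypothetical two-key schema and uses hand-written strings in place of real model responses.

```python
import json

# Hypothetical schema: the keys the downstream code expects.
REQUIRED_KEYS = {"sentiment", "confidence"}


def parse_model_output(raw_text):
    """Return the parsed dict, or None if the output is not usable."""
    try:
        data = json.loads(raw_text)
    except json.JSONDecodeError:
        return None                      # the model answered in prose, not JSON
    if not isinstance(data, dict) or not REQUIRED_KEYS.issubset(data):
        return None                      # valid JSON, but the wrong shape
    return data


# Hand-written stand-ins for model responses.
good = parse_model_output('{"sentiment": "negative", "confidence": 0.91}')
bad = parse_model_output("Sure! The sentiment is negative.")
```

When parsing fails, a workflow can retry with a stricter prompt instead of passing malformed text further down the pipeline.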

5. Key Elements of a Good Prompt

A high-quality prompt typically contains four components. It does not always need all four, but the more complex the task, the more components are required.

- Instruction: What specific task do you want the model to perform? (e.g., "Summarize," "Translate," "Code").

- Context: Background information or external data to guide the model (e.g., "You are an expert historian specializing in the Victorian era").

- Input Data: The specific content to process (e.g., the actual text of the article to be summarized).

- Output Indicator/Format: How the answer should look (e.g., "Format as a bulleted list," "Return raw JSON," "Limit to 50 words").
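
The four components can be assembled programmatically. The helper below is a hypothetical sketch: the labels and the ordering are one reasonable choice, not a required format.

```python
def build_prompt(instruction, context="", input_data="", output_format=""):
    """Join the non-empty components into one prompt, each under a label."""
    parts = [
        ("Context", context),
        ("Instruction", instruction),
        ("Input", input_data),
        ("Output format", output_format),
    ]
    return "\n\n".join(f"{label}: {text}" for label, text in parts if text)


full_prompt = build_prompt(
    instruction="Summarize the article below.",
    context="You are an expert historian specializing in the Victorian era.",
    input_data="<article text goes here>",
    output_format="Format as a bulleted list. Limit to 50 words.",
)

# A simple task may need only the instruction.
simple_prompt = build_prompt("Translate 'good morning' into French.")
```

Keeping the components separate makes it easy to reuse the same context and output format across many inputs.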

The "Act As" Persona

Assigning a persona (Role Prompting) helps the model tap into specific vocabulary and reasoning styles found in its training data associated with that role.

- Example: "Act as a senior Python developer..." vs. "Act as a computer science student..."

6. Prompt Patterns

Prompt patterns are repeatable strategies to solve specific problems.

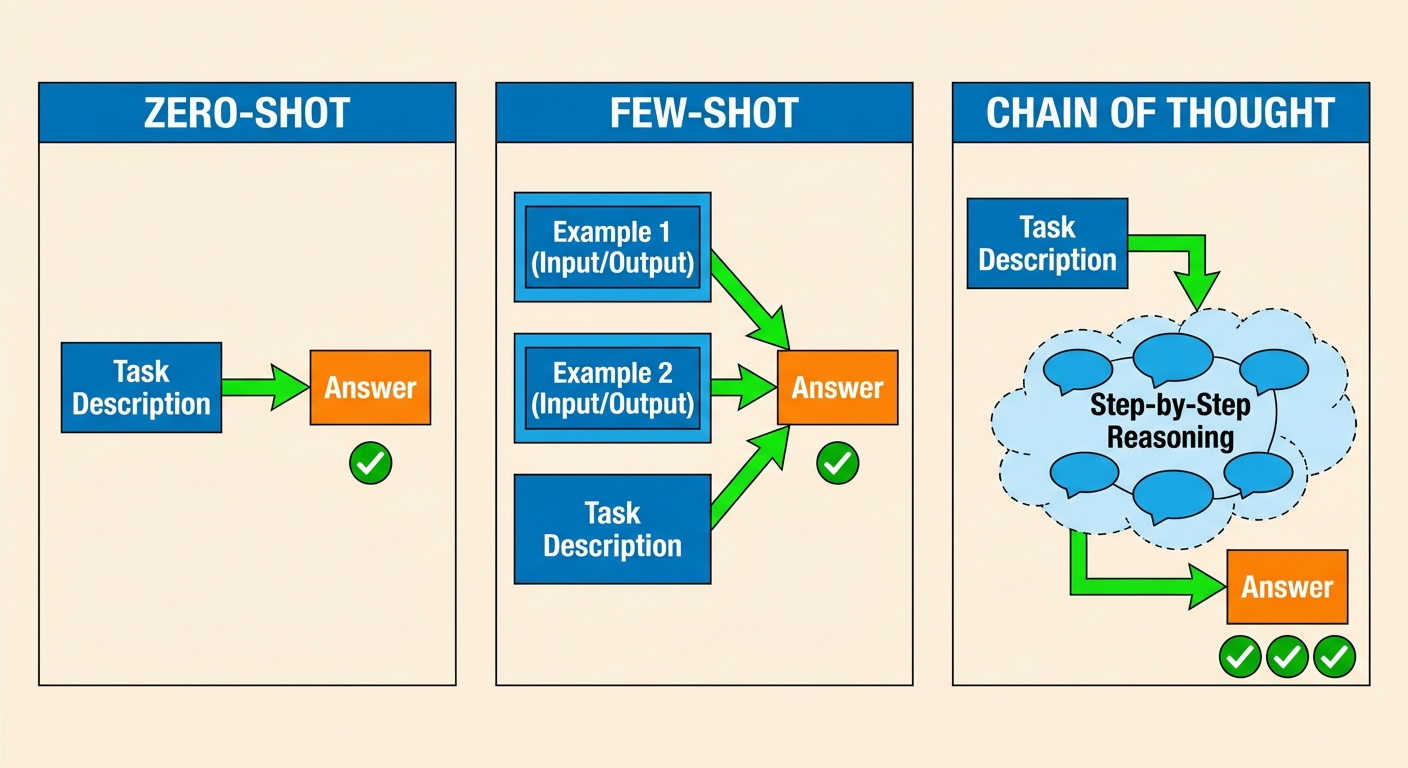

6.1 Zero-Shot vs. Few-Shot Prompting

- Zero-Shot: Providing the prompt with no examples.

  - Prompt: "Classify the sentiment: 'The food was cold.'" -> Output: "Negative."

- Few-Shot (In-Context Learning): Providing examples within the prompt to teach the model the desired pattern.

  - Prompt: "Great movie -> Positive. Terrible service -> Negative. The book was okay -> Neutral. The app is slow -> [Complete this]"
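
Both styles can be produced by one small helper. In this sketch the function name and the "text -> label" layout are assumptions that mirror the examples in this section. Passing an empty example list yields a zero-shot prompt.

```python
def build_classification_prompt(instruction, examples, query):
    """Build a zero-shot prompt (no examples) or a few-shot prompt (with examples)."""
    lines = [instruction]
    for text, label in examples:
        lines.append(f"{text} -> {label}")
    lines.append(f"{query} ->")          # left open for the model to complete
    return "\n".join(lines)


examples = [
    ("Great movie", "Positive"),
    ("Terrible service", "Negative"),
    ("The book was okay", "Neutral"),
]

zero_shot = build_classification_prompt("Classify the sentiment.", [], "The app is slow")
few_shot = build_classification_prompt("Classify the sentiment.", examples, "The app is slow")
```

The few-shot version shows the model both the label set and the output format, which typically makes responses easier to parse.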

6.2 Chain-of-Thought (CoT)

Chain-of-thought prompting encourages the model to lay out its reasoning steps before giving the final answer. This often improves accuracy on multi-step math and logic problems.

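- Technique: In the zero-shot variant, append a phrase such as "Let's think step by step" to the prompt. The few-shot variant instead includes worked examples whose answers show the intermediate reasoning.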
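
A minimal sketch of the zero-shot variant follows. The "Answer:" marker is an added convention (an assumption, not part of the technique itself) that makes the final answer easy to separate from the reasoning, and the sample response is hand-written rather than real model output.

```python
COT_SUFFIX = "Let's think step by step."


def make_cot_prompt(question):
    """Append the chain-of-thought trigger phrase and ask for a marked final answer."""
    return (
        f"{question}\n"
        f"{COT_SUFFIX}\n"
        "Finish with a line that starts with 'Answer:'."
    )


def extract_final_answer(response, marker="Answer:"):
    """Return the text after the last marker, or None if the marker is missing."""
    index = response.rfind(marker)
    if index == -1:
        return None
    return response[index + len(marker):].strip()


prompt = make_cot_prompt("A shop sells pens at 3 for $2. How much do 12 pens cost?")

# Hand-written stand-in for a model response.
sample_response = "12 pens is 4 groups of 3. Each group costs $2, so 4 * 2 = 8.\nAnswer: $8"
final_answer = extract_final_answer(sample_response)
```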

6.3 Retrieval Augmented Generation (RAG) Pattern

Although RAG is technically an architectural pattern, it shapes how prompts are built. Retrieved data (e.g., passages from a company database) is injected into the prompt context so the model can answer questions about private data it was not trained on.
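
The retrieve-then-inject flow can be sketched without any external services. This toy version ranks documents by word overlap with the question, which is an assumption made for brevity: production systems typically use embedding-based vector search. The documents and function names are hypothetical.

```python
import re


def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, documents, k=1):
    """Return the k documents sharing the most words with the query."""
    query_words = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:k]


def build_rag_prompt(query, documents, k=1):
    """Inject the retrieved passages into the prompt ahead of the question."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )


# Hypothetical private knowledge base.
documents = [
    "Refunds are processed within 14 days of the return request.",
    "The office is closed on public holidays.",
    "Support tickets are answered within two business days.",
]

rag_prompt = build_rag_prompt("How long do refunds take?", documents)
```

Only the passages that fit the question are added, which keeps the prompt within the context window and focuses the model on relevant facts.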

7. Prompt Tuning

Prompt Tuning differs from Prompt Engineering. Prompt engineering means manually crafting the text of a prompt, whereas prompt tuning learns prompt parameters automatically through gradient-based optimization.

7.1 Hard Prompts (Engineering)

- Discrete, human-readable text tokens.

- Optimized by humans rewriting questions.

- Fixed during model inference.

7.2 Soft Prompts (Tuning)

- Definition: Prompt tuning prepends a small number of trainable embedding vectors to the input of a frozen pre-trained language model.

- Process: Only these extra vectors (the "soft prompt") are updated via backpropagation, while the massive LLM weights remain static.

- Benefit: Prompt tuning is a form of Parameter-Efficient Fine-Tuning (PEFT) and is computationally much cheaper than fine-tuning the entire model. It also allows a single frozen model to switch tasks simply by swapping in a different small soft prompt.
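
The mechanics can be illustrated with a deliberately tiny toy. In the sketch below the "frozen model" is just a fixed linear layer over the mean of its input vectors, and the gradient is derived by hand. Everything here (weights, embeddings, learning rate) is a made-up assumption. Real prompt tuning applies the same idea to a full LLM using an autograd framework.

```python
import math

# Frozen "model" weights: never updated during tuning (a tuple, so immutable).
FROZEN_W = (0.5, -1.0, 0.25)


def predict(soft_prompt, token_embeddings):
    """Prepend the soft prompt, average all vectors, apply the frozen layer."""
    rows = soft_prompt + token_embeddings
    mean = [sum(row[j] for row in rows) / len(rows) for j in range(len(FROZEN_W))]
    logit = sum(w * m for w, m in zip(FROZEN_W, mean))
    return 1.0 / (1.0 + math.exp(-logit))


def loss(soft_prompt, token_embeddings, target):
    """Binary cross-entropy between the prediction and the target label."""
    p = predict(soft_prompt, token_embeddings)
    return -(target * math.log(p) + (1 - target) * math.log(1 - p))


def tune_soft_prompt(soft_prompt, token_embeddings, target, lr=1.0, steps=200):
    """Gradient descent that updates only the soft prompt vectors."""
    prompt = [list(vec) for vec in soft_prompt]       # work on a copy
    n_rows = len(prompt) + len(token_embeddings)
    for _ in range(steps):
        p = predict(prompt, token_embeddings)
        for vec in prompt:
            for j, w in enumerate(FROZEN_W):
                # d(loss)/d(vec[j]) = (p - target) * w / n_rows
                vec[j] -= lr * (p - target) * w / n_rows
    return prompt


token_embeddings = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]   # hypothetical input tokens
initial_prompt = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]     # two trainable vectors

before = loss(initial_prompt, token_embeddings, target=1.0)
tuned_prompt = tune_soft_prompt(initial_prompt, token_embeddings, target=1.0)
after = loss(tuned_prompt, token_embeddings, target=1.0)
```

Only six numbers (two vectors of three values) are trained here, while the model weights stay untouched. Storing a different tuned prompt per task is what allows one frozen model to serve several tasks.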