Unit 4 - Notes

INT428

Unit 4: Introduction to deep neural networks / Modern NLP

1. Introduction to Neural Networks

Artificial Neural Networks (ANNs) are computational models inspired by the human brain's biological neural networks. They are designed to identify patterns in data (such as images, sound, and text) that are too complex for rule-based programming.

The Perceptron

The Perceptron is the fundamental building block of a neural network, often called a single-layer neural network. It mimics a biological neuron.

- Structure:

  - Inputs ($x_1, x_2, \ldots, x_n$): Data fed into the neuron.

  - Weights ($w_1, w_2, \ldots, w_n$): Determine the importance of each input.

  - Bias ($b$): Allows the activation function to be shifted (conceptually similar to the intercept in a linear equation).

- Weighted Sum: $z = \sum_{i=1}^{n} w_i x_i + b$

- Activation Function: A mathematical function (e.g., Step, Sigmoid, ReLU) that determines if the neuron "fires" (outputs a signal).

- Limitation: A single perceptron can only solve linearly separable problems (e.g., AND/OR gates, but not XOR).

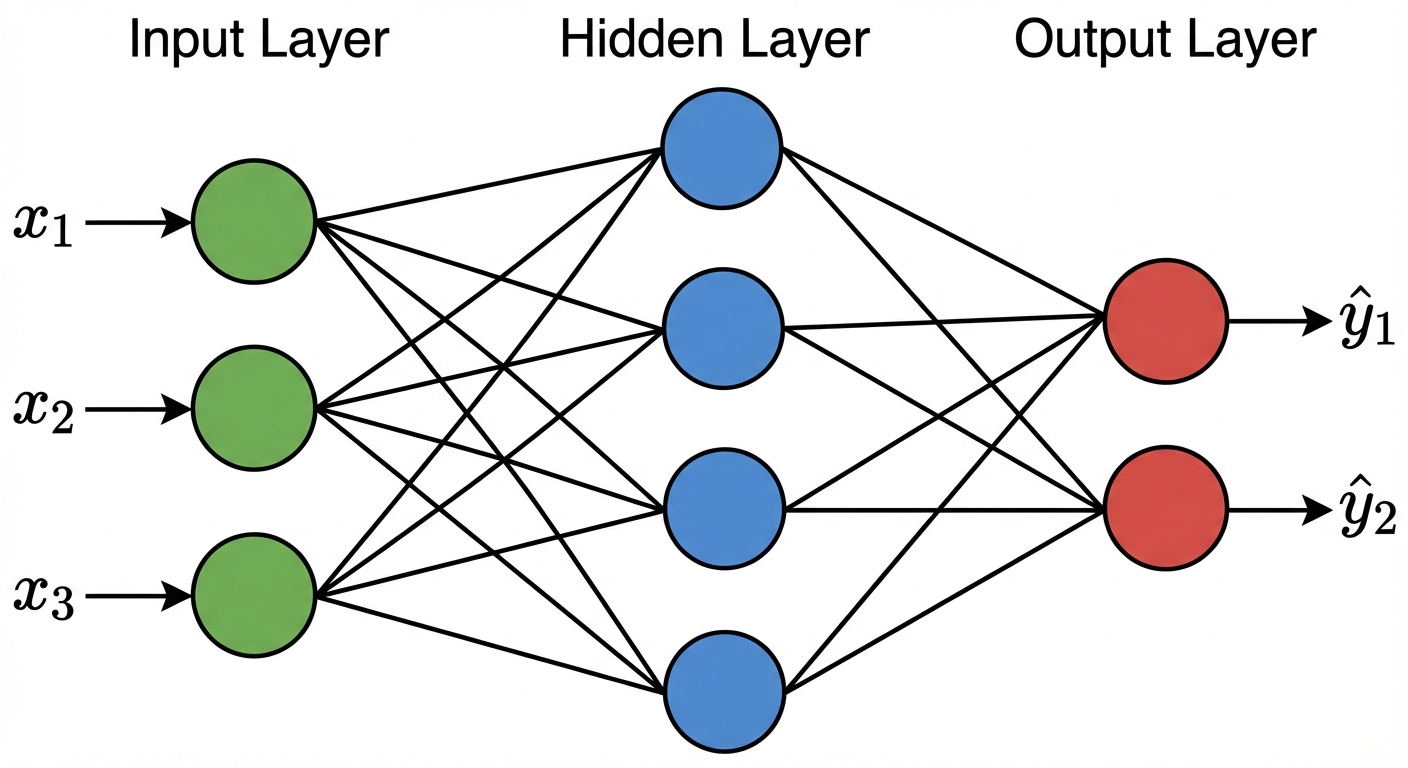
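The weighted sum, step activation and XOR limitation can be checked with a minimal pure-Python sketch. It assumes a step activation that outputs 1 when z >= 0 and the classic perceptron learning rule (w_i += lr * (target - prediction) * x_i); the function names are illustrative, not taken from any library.

```python
# Minimal perceptron sketch: weighted sum + step activation + perceptron learning rule.

def step(z):
    """Step activation: the neuron 'fires' (outputs 1) when z >= 0, otherwise 0."""
    return 1 if z >= 0 else 0

def weighted_sum(x, w, b):
    """z = w1*x1 + w2*x2 + ... + wn*xn + b"""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def train_perceptron(X, y, lr=1.0, epochs=20):
    """Perceptron learning rule: nudge weights and bias by lr * error * input."""
    w = [0.0] * len(X[0])
    b = 0.0
    for _ in range(epochs):
        for x, target in zip(X, y):
            error = target - step(weighted_sum(x, w, b))
            w = [wi + lr * error * xi for wi, xi in zip(w, x)]
            b += lr * error
    return w, b

def predict(X, w, b):
    return [step(weighted_sum(x, w, b)) for x in X]

X = [[0, 0], [0, 1], [1, 0], [1, 1]]
y_and = [0, 0, 0, 1]
y_xor = [0, 1, 1, 0]

w_and, b_and = train_perceptron(X, y_and)
and_pred = predict(X, w_and, b_and)
print("AND:", and_pred)  # → AND: [0, 0, 0, 1]

w_xor, b_xor = train_perceptron(X, y_xor)
xor_pred = predict(X, w_xor, b_xor)
print("XOR:", xor_pred)  # never equals y_xor: XOR is not linearly separable
```

AND is learned within a few epochs, while no choice of a single line (one weight vector and bias) can reproduce XOR, so the last prediction always disagrees with the target on at least one input.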

Multi-Layer Perceptron (MLP)

To solve complex, non-linear problems, neurons are stacked into layers.

- Input Layer: Receives the raw data.

- Hidden Layers: Intermediate layers where computation and feature extraction occur. "Deep Learning" refers to networks with multiple hidden layers.

- Output Layer: Produces the final prediction or classification.

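- Backpropagation: The algorithm used to train the network. It computes the error (loss) at the output, then applies the chain rule backward through the layers to find how much each weight contributed to that error (its gradient); an optimizer such as gradient descent then updates the weights to reduce the loss.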
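Backpropagation is the chain rule applied layer by layer. The sketch below assumes a tiny 2-2-1 network with sigmoid activations, a squared-error loss L = 0.5 * (y_hat - y)^2 and hand-picked starting weights (all hypothetical). It computes the gradients by hand, checks one of them against a finite-difference estimate, and takes one gradient-descent step.

```python
import copy
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, p):
    """2 inputs -> 2 sigmoid hidden units -> 1 sigmoid output."""
    h = [sigmoid(sum(p["W1"][j][i] * x[i] for i in range(2)) + p["b1"][j]) for j in range(2)]
    y_hat = sigmoid(sum(p["W2"][j] * h[j] for j in range(2)) + p["b2"])
    return h, y_hat

def loss(x, y, p):
    """Squared-error loss L = 0.5 * (y_hat - y)^2."""
    _, y_hat = forward(x, p)
    return 0.5 * (y_hat - y) ** 2

def backprop(x, y, p):
    """Gradients of the loss w.r.t. every weight, via the chain rule from output to input."""
    h, y_hat = forward(x, p)
    # Output layer: dL/dz2 = (y_hat - y) * sigmoid'(z2), with sigmoid'(z2) = y_hat * (1 - y_hat)
    delta2 = (y_hat - y) * y_hat * (1 - y_hat)
    # Hidden layer: send delta2 back through W2, then through each hidden sigmoid
    delta1 = [delta2 * p["W2"][j] * h[j] * (1 - h[j]) for j in range(2)]
    return {
        "W1": [[delta1[j] * x[i] for i in range(2)] for j in range(2)],
        "b1": delta1,
        "W2": [delta2 * h[j] for j in range(2)],
        "b2": delta2,
    }

def sgd_step(p, g, lr):
    """Gradient descent: move every parameter a small step against its gradient."""
    return {
        "W1": [[p["W1"][j][i] - lr * g["W1"][j][i] for i in range(2)] for j in range(2)],
        "b1": [p["b1"][j] - lr * g["b1"][j] for j in range(2)],
        "W2": [p["W2"][j] - lr * g["W2"][j] for j in range(2)],
        "b2": p["b2"] - lr * g["b2"],
    }

# Hypothetical starting weights and a single training example.
params = {"W1": [[0.5, -0.3], [0.8, 0.2]], "b1": [0.1, -0.1], "W2": [0.7, -0.4], "b2": 0.05}
x, y = [1.0, 0.5], 1.0
grads = backprop(x, y, params)

# Check one backprop gradient against a central finite-difference estimate.
eps = 1e-5
plus, minus = copy.deepcopy(params), copy.deepcopy(params)
plus["W1"][0][0] += eps
minus["W1"][0][0] -= eps
numeric = (loss(x, y, plus) - loss(x, y, minus)) / (2 * eps)
print(f"backprop: {grads['W1'][0][0]:.8f}  finite difference: {numeric:.8f}")

loss_before = loss(x, y, params)
loss_after = loss(x, y, sgd_step(params, grads, lr=0.5))
print(f"loss before: {loss_before:.4f}  after one step: {loss_after:.4f}")
```

Training a real network repeats the backprop-then-update cycle over many examples; frameworks compute the same gradients automatically instead of by hand.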

2. Advanced Neural Architectures

Different types of data require specialized network architectures.

Convolutional Neural Networks (CNN)

Primarily used for image processing and computer vision, though applicable to NLP.

- Concept: Uses "filters" (kernels) to scan inputs and detect spatial hierarchies of features (edges -> shapes -> objects).

- Key Layers: Convolutional Layer (feature extraction), Pooling Layer (dimensionality reduction), Fully Connected Layer (classification).

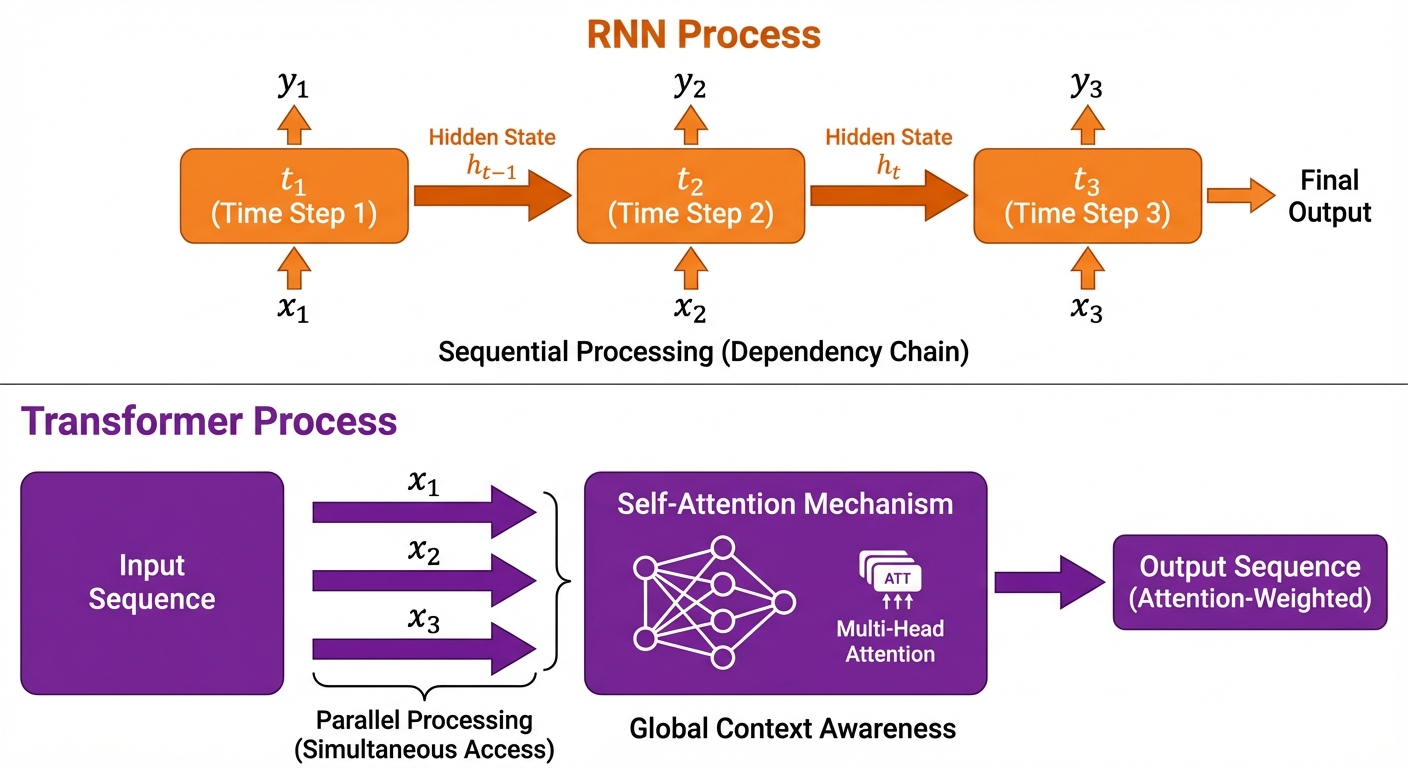
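A filter sliding over an image and a pooling step can be sketched in a few lines. This assumes a "valid" convolution (no padding, stride 1), implemented as cross-correlation as most deep-learning libraries do, a hypothetical 2x2 vertical-edge kernel, and non-overlapping 2x2 max pooling; the fully connected layer is omitted.

```python
def conv2d(image, kernel):
    """'Valid' 2-D convolution (strictly cross-correlation, as in most deep-learning libraries)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

def max_pool2d(fmap, size=2):
    """Non-overlapping max pooling (stride = size); leftover rows/columns are dropped."""
    return [
        [
            max(fmap[r + i][c + j] for i in range(size) for j in range(size))
            for c in range(0, len(fmap[0]) - size + 1, size)
        ]
        for r in range(0, len(fmap) - size + 1, size)
    ]

# 5x5 image: dark (0) on the left, bright (1) on the right.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hypothetical 2x2 vertical-edge filter: responds where intensity rises left to right.
edge_kernel = [[-1, 1],
               [-1, 1]]

feature_map = conv2d(image, edge_kernel)
pooled = max_pool2d(feature_map)
print(feature_map)  # → [[0, 2, 0, 0], [0, 2, 0, 0], [0, 2, 0, 0], [0, 2, 0, 0]]
print(pooled)       # → [[2, 0], [2, 0]]
```

The feature map lights up only in the column where the edge sits, and pooling halves each dimension while keeping that strongest response. In a real CNN the kernel values are learned rather than hand-written.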

Recurrent Neural Networks (RNN)

Designed for sequential data (time series, text, audio).

- Memory: Unlike feed-forward networks (such as the MLP), RNNs contain a loop: the hidden state computed at the previous time step is fed back in, together with the current input, at the next step.

- Use Case: Predicting the next word in a sentence based on previous words.

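- Limitation: Vanishing Gradient Problem. During training, gradients shrink as they are propagated back through many time steps, so RNNs struggle to learn from information far back in a long sequence (e.g., the beginning of a long paragraph).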
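The recurrence and the vanishing gradient can both be seen in a scalar RNN. This sketch assumes a single hidden unit with a tanh activation and hypothetical weights; it computes the exact derivative of the last hidden state with respect to the initial one, which is a product of one factor per time step.

```python
import math

def rnn_forward(xs, w_x, w_h, b, h0=0.0):
    """Scalar RNN: h_t = tanh(w_x * x_t + w_h * h_{t-1} + b). Returns [h_1, ..., h_T]."""
    hs, h = [], h0
    for x in xs:
        h = math.tanh(w_x * x + w_h * h + b)  # previous hidden state feeds the current step
        hs.append(h)
    return hs

def grad_last_wrt_first(hs, w_h):
    """dh_T/dh_0 = product over t of dh_t/dh_{t-1} = w_h * (1 - h_t**2)."""
    g = 1.0
    for h in hs:
        g *= w_h * (1 - h ** 2)
    return g

w_x, w_h, b = 0.5, 0.8, 0.0   # hypothetical weights
short_seq = [1.0] * 5
long_seq = [1.0] * 50

g_short = grad_last_wrt_first(rnn_forward(short_seq, w_x, w_h, b), w_h)
g_long = grad_last_wrt_first(rnn_forward(long_seq, w_x, w_h, b), w_h)
print(f"5 steps:  {g_short:.2e}")
print(f"50 steps: {g_long:.2e}")
```

Each factor has magnitude at most |w_h| (here 0.8) because tanh' is at most 1, so the gradient reaching early time steps shrinks at least geometrically with sequence length.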

Transformer Architecture

The foundation of modern NLP (introduced in the paper "Attention Is All You Need", 2017).

- Parallelism: Unlike RNNs, which process tokens one at a time, Transformers process all tokens of a sequence simultaneously, allowing massive parallelization and faster training.

- Encoder-Decoder Structure:

  - Encoder: Processes the input text to understand context.

  - Decoder: Generates output text based on the encoder's understanding.

3. Introduction to Natural Language Processing (NLP)

NLP is a subfield of AI focused on the interaction between computers and human language. The goal is to enable computers to read, interpret, and generate human language in useful ways.

NLP Phases

- Lexical Analysis: Breaking text into paragraphs, sentences, and words, and analyzing the structure of words.

- Syntactic Analysis (Parsing): Analyzing grammar and sentence structure.

- Semantic Analysis: Determining the meaning of words and sentences.

- Discourse Integration: Understanding the sentence in the context of preceding sentences.

- Pragmatic Analysis: Deriving meaning from real-world knowledge and intent.

Core Components

Tokenization

The process of breaking down text into smaller units called tokens.

- Word Tokenization: "AI is great" → ["AI", "is", "great"]

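- Sub-word Tokenization: Used in modern models (BERT/GPT) to handle rare or unseen words by splitting them into known pieces. "Playing" → ["Play", "##ing"] (the "##" prefix is BERT's WordPiece marker for a piece that continues the previous one).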

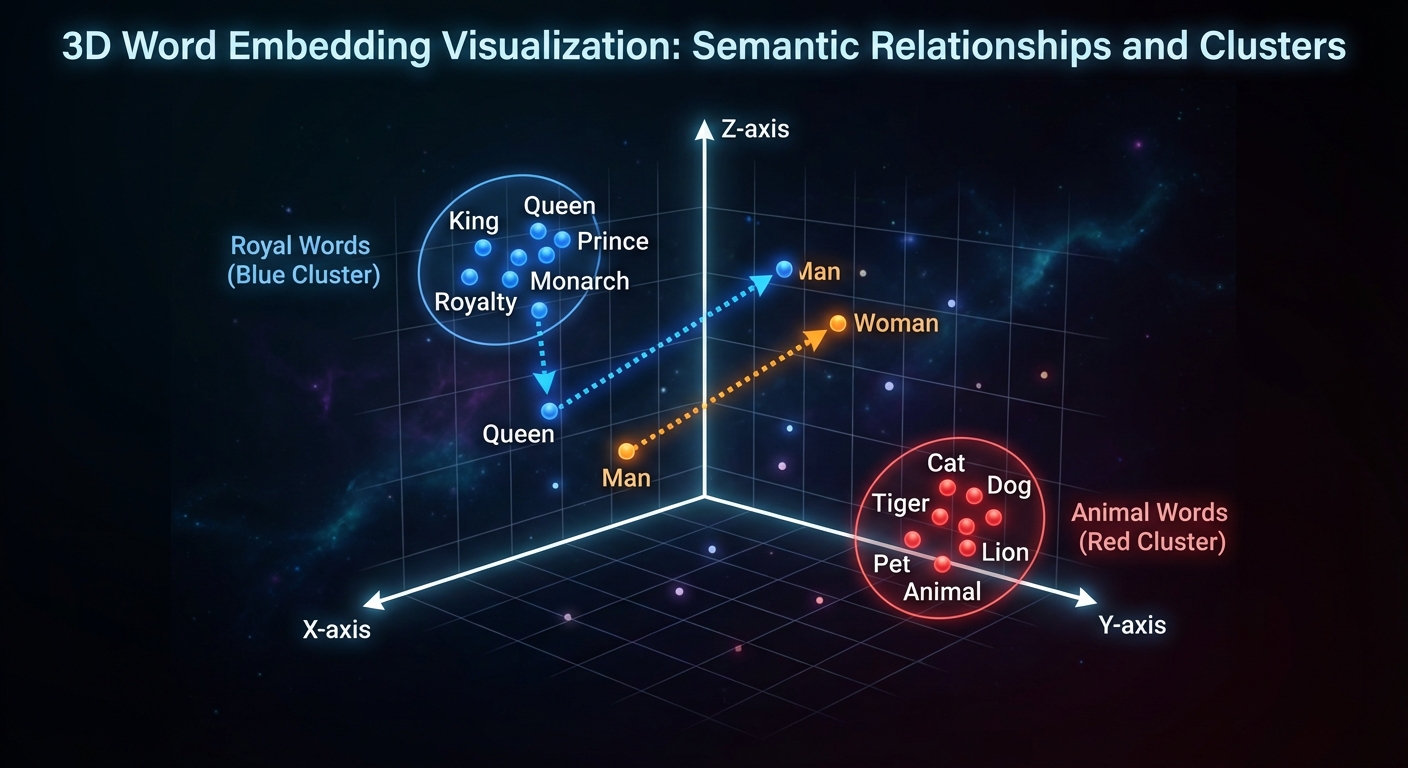
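Both kinds of tokenization can be sketched in plain Python. The word tokenizer below is a simple regular expression, and the sub-word tokenizer follows the greedy longest-match-first strategy used by BERT's WordPiece; the tiny vocabulary is hypothetical, whereas real models learn tens of thousands of pieces from data.

```python
import re

def word_tokenize(text):
    """Split into runs of word characters, keeping punctuation as separate tokens."""
    return re.findall(r"\w+|[^\w\s]", text)

def wordpiece_tokenize(word, vocab, unk="[UNK]"):
    """Greedy longest-match-first sub-word split in the style of BERT's WordPiece.
    Non-initial pieces carry the '##' continuation prefix."""
    pieces, start = [], 0
    while start < len(word):
        end, match = len(word), None
        while end > start:
            piece = word[start:end] if start == 0 else "##" + word[start:end]
            if piece in vocab:
                match = piece
                break
            end -= 1
        if match is None:       # no known piece fits: the whole word becomes unknown
            return [unk]
        pieces.append(match)
        start = end
    return pieces

# Hypothetical toy vocabulary.
vocab = {"Play", "Run", "##ing", "##ed", "##s"}

print(word_tokenize("AI is great"))          # → ['AI', 'is', 'great']
print(wordpiece_tokenize("Playing", vocab))  # → ['Play', '##ing']
print(wordpiece_tokenize("Played", vocab))   # → ['Play', '##ed']
```

Because "Play", "##ing" and "##ed" are reused across many words, a fixed vocabulary can still cover words it never stored whole.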

Embeddings

Converting tokens into numeric vector representations. In learned (dense) embeddings, words with similar meanings end up with similar vectors.

- One-Hot Encoding (Old): Sparse, high-dimensional vectors (mostly zeros).

- Word Embeddings (New): Dense vectors (e.g., Word2Vec, GloVe). They capture semantic relationships.

  - Analogy: Vector("King") - Vector("Man") + Vector("Woman") ≈ Vector("Queen").
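The contrast between one-hot and dense vectors, and the analogy arithmetic, can be reproduced with toy numbers. The 3-dimensional embeddings below are hand-made and hypothetical (real Word2Vec or GloVe vectors are learned and have hundreds of dimensions); similarity is measured with cosine similarity, and the analogy search excludes the three input words, as is customary.

```python
import math

def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

vocab = ["king", "queen", "man", "woman", "apple"]

def one_hot(word):
    """Sparse: vocabulary-sized vector with a single 1."""
    return [1.0 if w == word else 0.0 for w in vocab]

# Hypothetical hand-made 3-d embeddings (dimensions loosely: royalty, male, female).
emb = {
    "king":  [0.9, 0.8, 0.1],
    "queen": [0.9, 0.1, 0.8],
    "man":   [0.1, 0.8, 0.1],
    "woman": [0.1, 0.1, 0.8],
    "apple": [0.0, 0.1, 0.1],
}

def analogy(a, b, c):
    """Return the word closest to vec(a) - vec(b) + vec(c), excluding the inputs."""
    target = [x - y + z for x, y, z in zip(emb[a], emb[b], emb[c])]
    candidates = [w for w in emb if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(emb[w], target))

print(cosine(one_hot("king"), one_hot("queen")))  # → 0.0 (one-hot vectors carry no similarity)
print(analogy("king", "man", "woman"))            # → queen
```

Any two distinct one-hot vectors are orthogonal, so they cannot express that "king" is closer to "queen" than to "apple"; dense vectors can.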

4. Modern NLP and Language Models

Attention Mechanism

The breakthrough that allows models to focus on specific parts of the input sequence when generating output, regardless of the distance between words.

- Self-Attention: Allows a word to look at other words in the same sentence to determine its own context (e.g., resolving what "it" refers to in "The animal didn't cross the street because it was too tired").

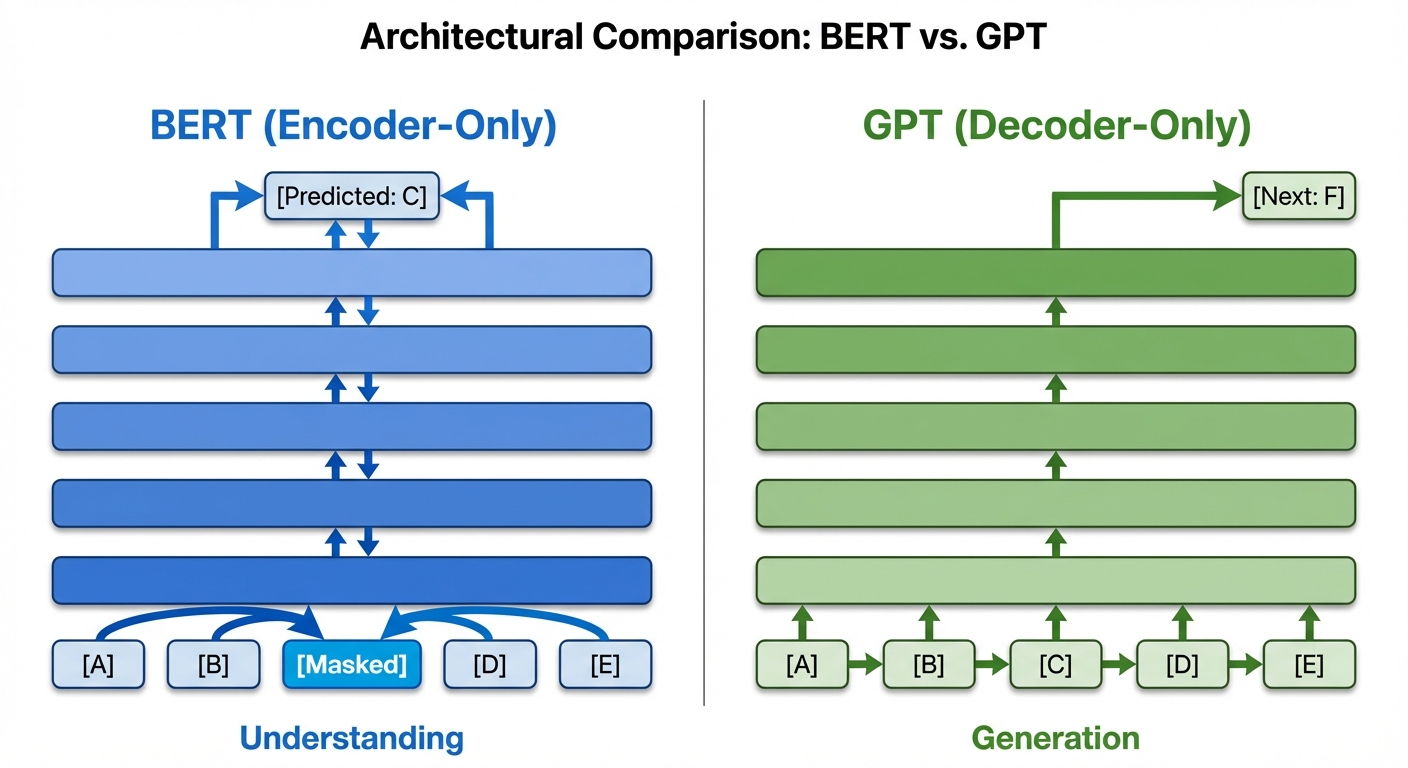
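Self-attention is usually computed as scaled dot-product attention, softmax(Q K^T / sqrt(d)) V. The sketch below is a simplification: a single head, Q = K = V = the input vectors (real Transformers first multiply by learned projection matrices), and hypothetical 2-d token vectors. The optional causal mask is how decoder-only models such as GPT stop a token from attending to later tokens.

```python
import math

def softmax(xs):
    m = max(xs)                                  # subtract max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def transpose(A):
    return [list(col) for col in zip(*A)]

def self_attention(X, causal=False):
    """Single-head scaled dot-product self-attention: softmax(Q K^T / sqrt(d)) V.
    Simplification: Q = K = V = X (no learned projection matrices)."""
    d = len(X[0])
    Q, K, V = X, X, X
    scores = matmul(Q, transpose(K))             # scores[i][j]: how much token i attends to token j
    weights = []
    for i, row in enumerate(scores):
        scaled = [s / math.sqrt(d) for s in row]
        if causal:                               # decoder-style mask: token i cannot see tokens j > i
            scaled = [s if j <= i else float("-inf") for j, s in enumerate(scaled)]
        weights.append(softmax(scaled))
    return matmul(weights, V), weights

# Hypothetical 2-d vectors for a 3-token sentence.
X = [[1.0, 0.0],
     [0.0, 1.0],
     [1.0, 1.0]]

out, w = self_attention(X)
out_causal, w_causal = self_attention(X, causal=True)
for row in w:
    print([round(p, 3) for p in row])
print(w_causal[0])   # → [1.0, 0.0, 0.0]
```

Each output vector is a weighted mix of every token's vector, with weights that depend on content rather than distance, which is why attention can link "it" to "animal" however far apart they are.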

Large Language Models (LLMs)

BERT (Bidirectional Encoder Representations from Transformers)

- Architecture: Encoder-only.

- Mechanism: Bidirectional. Each token's representation draws on the context to its left and to its right at the same time, instead of reading in only one direction.

- Training Objectives:

  - Masked LM: Randomly masks about 15% of the input tokens and trains the model to predict them from the surrounding context.

  - Next Sentence Prediction: Predicts whether sentence B actually follows sentence A in the original text.

- Application: Excellent for understanding, classification, and question answering.
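The data side of the masked-LM objective can be sketched as follows. This is a simplified version: every selected token becomes "[MASK]", whereas the original BERT recipe replaces only 80% of the selected tokens with [MASK], swaps 10% for a random token and leaves 10% unchanged. The function name and seed handling are illustrative.

```python
import random

def mask_tokens(tokens, mask_rate=0.15, seed=0, mask_token="[MASK]"):
    """Simplified masked-LM data prep: hide ~15% of tokens and keep the originals as labels."""
    rng = random.Random(seed)
    k = max(1, round(mask_rate * len(tokens)))
    positions = sorted(rng.sample(range(len(tokens)), k))
    masked = list(tokens)
    labels = {}
    for p in positions:
        labels[p] = tokens[p]      # the model is trained to predict this original token
        masked[p] = mask_token
    return masked, labels

tokens = "the animal did not cross the street because it was too tired".split()
masked, labels = mask_tokens(tokens)
print(" ".join(masked))
print(labels)
```

The loss is computed only at the masked positions, so the model must use context on both sides of each gap to recover the hidden word.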

GPT (Generative Pre-trained Transformer)

- Architecture: Decoder-only.

- Mechanism: Autoregressive (predicts the next token based on previous tokens).

- Training Objective: Next-token prediction.

- Application: Text generation, code generation, creative writing.
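Autoregressive generation is a loop: predict a distribution over the next token, pick one, append it, repeat. In this sketch the "model" is a hypothetical lookup table standing in for a trained decoder (a real GPT conditions on the whole context, while this toy only looks at the last token), and decoding is greedy.

```python
def toy_next_token_probs(context):
    """Hypothetical stand-in for a trained decoder: returns P(next token | context).
    A real GPT conditions on the whole context; this toy only looks at the last token."""
    table = {
        "The": {"cat": 0.6, "dog": 0.4},
        "cat": {"sat": 0.7, "ran": 0.3},
        "dog": {"ran": 0.8, "sat": 0.2},
        "sat": {"down": 0.9, "<eos>": 0.1},
        "ran": {"away": 0.9, "<eos>": 0.1},
        "down": {"<eos>": 1.0},
        "away": {"<eos>": 1.0},
    }
    return table.get(context[-1], {"<eos>": 1.0})

def generate(prompt, max_new_tokens=10):
    """Greedy autoregressive decoding: pick the most likely next token, append it, repeat."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        probs = toy_next_token_probs(tokens)
        next_token = max(probs, key=probs.get)
        if next_token == "<eos>":
            break
        tokens.append(next_token)   # the new token becomes part of the next step's input
    return tokens

print(" ".join(generate(["The"])))         # → The cat sat down
print(" ".join(generate(["The", "dog"])))  # → The dog ran away
```

Sampling from `probs` instead of taking the maximum gives more varied text; greedy decoding is used here only because its output is deterministic.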

5. Applications and Use Cases

Building Chatbots and Digital Assistants

Modern chatbots move beyond rule-based keyword matching toward contextual AI.

- Pipeline: User Input → ASR (Speech to Text) → NLP (Intent Recognition + Entity Extraction) → Dialog Management → NLG (Natural Language Generation) → Output.

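- RAG (Retrieval-Augmented Generation): Connecting an LLM to external (e.g., private) data sources. Relevant documents are retrieved and supplied to the model along with the question, grounding answers in facts and reducing hallucination.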
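The retrieve-then-augment half of RAG can be sketched without any external service. This assumes bag-of-words cosine similarity as a stand-in for the embedding model and vector database a production system would use; the documents, prompt wording and function names are hypothetical, and the final LLM call is deliberately left out.

```python
import math
import re
from collections import Counter

def bag_of_words(text):
    return Counter(re.findall(r"\w+", text.lower()))

def cosine(a, b):
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query, documents, k=1):
    """Rank documents by word-overlap cosine similarity to the query (stand-in for a vector DB)."""
    q = bag_of_words(query)
    ranked = sorted(documents, key=lambda d: cosine(q, bag_of_words(d)), reverse=True)
    return ranked[:k]

def build_prompt(query, contexts):
    """Augment the user question with retrieved text before it is sent to an LLM."""
    context_block = "\n".join(f"- {c}" for c in contexts)
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context_block}\n"
        f"Question: {query}"
    )

# Hypothetical private knowledge base.
documents = [
    "The refund policy allows returns within 30 days of purchase.",
    "Our office is open Monday to Friday from 9 am to 5 pm.",
    "Shipping is free for orders above 50 dollars.",
]

query = "What is the refund policy for returns?"
prompt = build_prompt(query, retrieve(query, documents))
print(prompt)
# The LLM call itself is omitted; whichever model is used would receive `prompt`.
```

Because the answer-bearing sentence is placed directly in the prompt, the model can quote it instead of relying on what it memorized during training.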

Key NLP Use Cases

1. Sentiment Analysis

Determining the emotional tone behind words.

- Type: Classification task.

- Example: Analyzing tweets to see if users are happy or angry about a product launch.

- Output: Positive, Negative, Neutral.
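To make the classification framing concrete, here is a deliberately simple lexicon-based baseline rather than the neural classifiers discussed above: it counts words from small positive and negative word lists (both hypothetical) and maps the score to one of the three labels.

```python
import re

# Hypothetical mini-lexicon; real systems learn these signals from labelled data.
POSITIVE = {"love", "great", "happy", "excellent", "amazing", "good"}
NEGATIVE = {"hate", "angry", "terrible", "bad", "broken", "disappointed"}

def classify_sentiment(text):
    """Lexicon baseline: count positive vs negative words and map the score to a label."""
    words = re.findall(r"[a-z']+", text.lower())
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "Positive"
    if score < 0:
        return "Negative"
    return "Neutral"

tweets = [
    "I love the new phone, the camera is amazing!",
    "Terrible launch. My order arrived broken and I am angry.",
    "The launch event starts at 10 am.",
]
for t in tweets:
    print(classify_sentiment(t), "|", t)
```

A lexicon misses negation ("not great") and sarcasm, which is exactly where trained models such as fine-tuned BERT classifiers do better.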

2. Machine Translation

Translating text from one language to another automatically.

- Model: Sequence-to-Sequence (Seq2Seq) Transformers.

- Example: Google Translate, DeepL.

3. Text Summarization

Reducing a text document to a short version while preserving key information.

- Extractive Summarization: Selects and stitches together important sentences from the original text (like a highlighter).

- Abstractive Summarization: Generates entirely new sentences that capture the essence of the text (like a human explanation).
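Extractive summarization can be sketched with word-frequency scoring, one simple choice among many: each sentence is scored by how often its content words occur in the whole text, and the top sentences are returned in their original order. The stopword list and sample text are hypothetical; abstractive summarization needs a generative model and is not sketched here.

```python
import re
from collections import Counter

STOPWORDS = {"the", "a", "an", "is", "was", "of", "from", "and", "to", "in"}

def extractive_summary(text, n_sentences=2):
    """Frequency-based extractive summary: score each sentence by how often its
    content words appear in the whole text, keep the top n in original order."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())

    def content_words(s):
        return [w for w in re.findall(r"\w+", s.lower()) if w not in STOPWORDS]

    freq = Counter(w for s in sentences for w in content_words(s))
    scores = [sum(freq[w] for w in content_words(s)) for s in sentences]
    top = sorted(range(len(sentences)), key=lambda i: scores[i], reverse=True)[:n_sentences]
    return " ".join(sentences[i] for i in sorted(top))   # original order, like a highlighter

text = ("Neural networks learn patterns from data. "
        "Neural networks power modern NLP systems. "
        "The weather was pleasant yesterday.")
print(extractive_summary(text, 2))
# → Neural networks learn patterns from data. Neural networks power modern NLP systems.
```

Every word in the output is copied from the input, which is what distinguishes extractive from abstractive summaries.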